SCAMP Summer of Coding

All the software I did over the summer for SCAMP

Recap

I had the hardware running the demo code, and I was able to flash the QSPI chip on the board with the base operating system that is required to use the hardware. I also made it past finals, which was awesome!! Over the summer I did a ton for this project, but I did not log or keep track of what I did very well, so I will just summarize all the steps I took to get the software side of SCAMP working. As such, this will be a bit of a long post.

Setting up the Operating System

The operating system was relatively simple to put together, since it was a matter of downloading the image from Google and flashing it onto the chip following the vendor's instructions. That said, I had to make some changes to the operating system so that it would run the way I wanted it to.

Setting up a Hotspot

Since we tend to launch outdoors in the middle of nowhere, I wanted a way to connect to SCAMP without much trouble. The board does have an OTG USB port that lets the MDT tool provided by Google connect to it, but that requires a physical cable. That was a problem, since I did not want to have to carry a cable around, especially when trying to debug the payload on a flight line.

So I wanted to set up a hotspot on the board to connect to. The board comes with a Broadcom chip that it uses for Wi-Fi, so I configured it to bring up a hotspot on boot.

Steps I took:

- First we need to generate the actual network

nmcli connection add type wifi ifname wlan0 con-name scampnet ssid "scampnet" mode ap ipv4.method shared

- Then add our static IP

nmcli connection modify scampnet ipv4.method manual ipv4.addresses 192.168.1.1/24

- This should bring the network up:

nmcli connection up scampnet

Configuring Storage

This one was pretty easy. I wanted to make sure all the data was saved on the SD card rather than on the 8 GB of flash memory soldered to the board, so that I would have a way to recover data if the payload lands in a river or something. With an SD card there is a higher chance of data recovery than with a soldered-on chip. To pull this off, I made sure the operating system mounts the SD card during the boot process and triggers an LED if no SD card is found.
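The boot-time check described above could look roughly like the following. This is a minimal sketch and not the actual SCAMP code: it assumes the check is done by scanning the kernel's mount table (normally `/proc/mounts`) and that the LED is exposed through the Linux LED class in sysfs. The function names, the mount point, and the LED path in the usage note are all hypothetical.

```c
#include <stdio.h>
#include <string.h>

/* Returns 1 if mount_point appears as a mount target in the mount table
 * at mounts_path (normally "/proc/mounts"), 0 if it does not, and -1 if
 * the table cannot be read. */
int is_mounted(const char *mounts_path, const char *mount_point)
{
    FILE *fp = fopen(mounts_path, "r");
    if (fp == NULL) {
        return -1;
    }

    char line[1024];
    int found = 0;
    while (fgets(line, sizeof line, fp) != NULL) {
        char device[256];
        char target[256];
        /* Each line starts with "<device> <mount point> <fstype> ..." */
        if (sscanf(line, "%255s %255s", device, target) == 2 &&
            strcmp(target, mount_point) == 0) {
            found = 1;
            break;
        }
    }
    fclose(fp);
    return found;
}

/* Writes "1" or "0" to an LED brightness file (hypothetical path, e.g.
 * something under /sys/class/leds/). Returns 0 on success, -1 on error. */
int set_led(const char *brightness_path, int on)
{
    FILE *fp = fopen(brightness_path, "w");
    if (fp == NULL) {
        return -1;
    }
    int ok = fputs(on ? "1\n" : "0\n", fp) >= 0;
    if (fclose(fp) != 0) {
        ok = 0;
    }
    return ok ? 0 : -1;
}
```

A boot script or small service could then call `is_mounted("/proc/mounts", "/mnt/sdcard")` and, if the result is not 1, call `set_led()` on whichever LED the board exposes. Both `/mnt/sdcard` and the LED path are placeholders.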

Kicking off Flight Software

Finally, I needed a way for the operating system to actually start the flight software when power was provided to the board. This way, setting up for launch would be less stressful and the payload would ideally "just work". To do this, I used a simple crontab task to spin up the flight software in a separate process after everything had booted up.

Setting up Dev Environment

Dev Container

Now that the operating system was set up, I needed an environment to actually compile code in. For this, I ended up making a rather cursed dev container. First I pulled the .so file responsible for talking to the TPU over PCIe off the board, since the OS ships with that library. This way I would be able to link against the library and use the TPU from my code.

Then I tossed it into this container where I built a few more things:

# Dockerfile for defining the container
FROM debian:buster
ENV DEBIAN_FRONTEND=noninteractive
RUN dpkg --add-architecture armhf
RUN dpkg --add-architecture arm64
RUN apt-get update
RUN apt-get install -y \
    build-essential crossbuild-essential-armhf crossbuild-essential-arm64 \
    libjpeg-dev:arm64 libopencv-dev:arm64 wget
RUN wget https://github.com/Kitware/CMake/releases/download/v3.28.5/cmake-3.28.5-linux-aarch64.sh && \
    chmod +x cmake-3.28.5-linux-aarch64.sh && ./cmake-3.28.5-linux-aarch64.sh --skip-license --prefix=/usr/local
RUN apt-get install -y git
RUN git clone https://github.com/tensorflow/tensorflow && \
    cd tensorflow && \
    git checkout a4dfb8d1a71385bd6d122e4f27f86dcebb96712d -b tf2.5
RUN mkdir tensorflow_build && cd tensorflow_build && \
    cmake ../tensorflow/tensorflow/lite \
    -DCMAKE_CXX_COMPILER=/usr/bin/aarch64-linux-gnu-g++ \
    -DCMAKE_C_COMPILER=/usr/bin/aarch64-linux-gnu-gcc \
    -DTFLITE_ENABLE_XNNPACK=OFF \
    -DTFLITE_ENABLE_RUY=OFF \
    -DTFLITE_ENABLE_NNAPI=OFF \
    -DCMAKE_C_FLAGS="-march=armv8-a" \
    -DCMAKE_CXX_FLAGS="-march=armv8-a" \
    && cmake --build . -j1
COPY libedgetpu.so.1.0 /usr/lib/aarch64-linux-gnu/

Now I know that this isn’t the best container ever but it did the job for me so I ended up working with it.

Compiling software & deploying.

Now that I had a container to build with and the board fully stood up, it was time to put code on it. I wanted to use cFS for this project since I was still learning it at the time and wanted to get more hands-on with it. So I started by setting up my toolchain file for the Coral Dev Board, which turned out to be relatively trivial:

# This example toolchain file describes the cross compiler to use for
# the target architecture indicated in the configuration file.

# Basic cross system configuration
SET(CMAKE_SYSTEM_NAME Linux)
SET(CMAKE_SYSTEM_VERSION 1)
SET(CMAKE_SYSTEM_PROCESSOR arm64)

# Specify the cross compiler executables
# Typically these would be installed in a home directory or somewhere
# in /opt. However in this example the system compiler is used.
SET(CMAKE_C_COMPILER "/usr/bin/gcc")
SET(CMAKE_CXX_COMPILER "/usr/bin/g++")

# Configure the find commands
SET(CMAKE_FIND_ROOT_PATH_MODE_PROGRAM NEVER)
SET(CMAKE_FIND_ROOT_PATH_MODE_LIBRARY NEVER)
SET(CMAKE_FIND_ROOT_PATH_MODE_INCLUDE NEVER)

# These variable settings are specific to cFE/OSAL and determines which
# abstraction layers are built when using this toolchain
SET(CFE_SYSTEM_PSPNAME "pc-linux")
SET(OSAL_SYSTEM_OSTYPE "posix")

Using that toolchain file, I was able to configure cFS to actually run on the board. Once I had the whole flow working, I automated everything through bash scripts.

Flight software version 1

Since I was working on hardware at the same time, along with my jobs, I aimed to make my first iteration of flight software as simple as possible so that I could get something flying on the first launch in September. As such, I put together this simple architecture to fly.

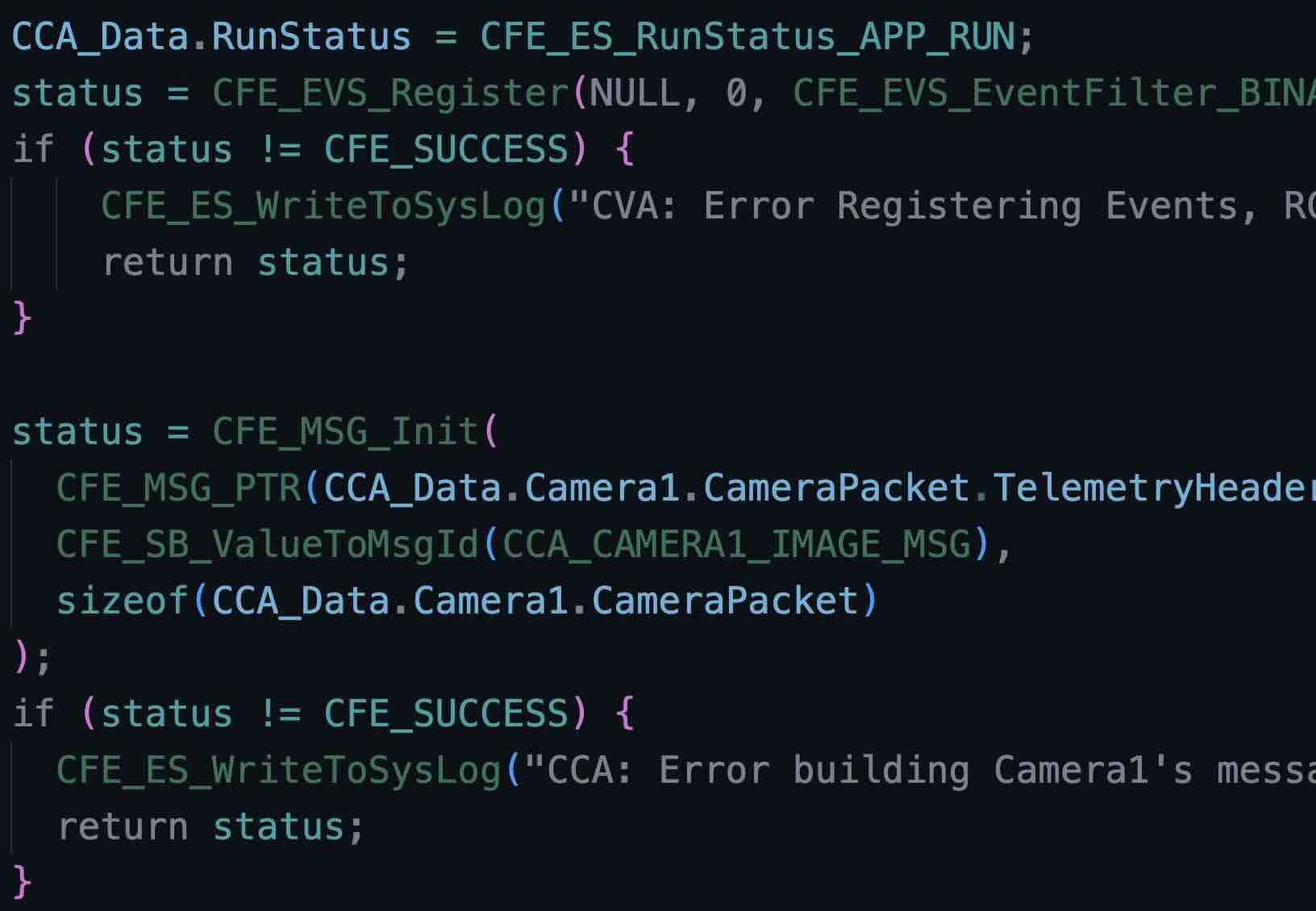

Leveraging most of the open-source components and built-in features of NASA cFS, I was able to spin up the flight system rather quickly. The applications themselves are: Sch (the scheduler driving the system); the cFE core components, which are the executive applications managing the system; TA (the app monitoring system temperature); and finally CVA (the computer vision application). TA was rather trivial, since it simply read the temperature of the processing units as reported by the kernel and dumped the data into a CSV file saved on the SD card.

The CVA application, on the other hand, was rather complex, for this build at least. This application was practically the entire payload. Below is the flow diagram for how the application works.
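To make the TA idea concrete, here is a minimal sketch of reading a temperature from the kernel and appending it to a CSV file. It is an illustration, not the actual TA code. It assumes the temperature comes from the standard Linux thermal sysfs interface (files such as `/sys/class/thermal/thermal_zone0/temp`, which report millidegrees Celsius), and the function names, CSV layout, and log path are hypothetical.

```c
#include <stdio.h>
#include <time.h>

/* Reads one integer reading (millidegrees Celsius) from a sysfs-style
 * file. Returns 0 on success, -1 if the file is missing or malformed. */
int read_temp_millic(const char *path, long *out)
{
    FILE *fp = fopen(path, "r");
    if (fp == NULL) {
        return -1;
    }
    int ok = fscanf(fp, "%ld", out) == 1;
    fclose(fp);
    return ok ? 0 : -1;
}

/* Appends one "<unix time>,<degrees C>" row to the CSV log.
 * Returns 0 on success, -1 on error. */
int append_temp_row(const char *csv_path, time_t when, long millic)
{
    FILE *fp = fopen(csv_path, "a");
    if (fp == NULL) {
        return -1;
    }
    int ok = fprintf(fp, "%lld,%.3f\n", (long long)when, millic / 1000.0) > 0;
    if (fclose(fp) != 0) {
        ok = 0;
    }
    return ok ? 0 : -1;
}
```

Each time the app is woken up, it could call `read_temp_millic()` on a thermal zone file and pass the result, together with `time(NULL)`, to `append_temp_row()` with a log path on the SD card.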

flowchart TD
    A([Init])
    B[Pend on Software Bus]
    BB{CVA Wakeup message}
    C{cFE Terminate Signal}
    D[/Camera Image/]
    E[[Save Image to sdcard]]
    EE[[Send Image to TPU]]
    EEE[[Output from TPU]]
    EEEE[[Save Output to sdcard]]
    EEEEE[Join]
    F([Cleanup])
    A --> B --> BB
    BB -- yes --> D
    BB -- no --> B
    D --> E --> EEEEE
    D --> EE -- after processing --> EEE --> EEEE --> EEEEE
    EEEEE --> C
    C -- no --> B
    C -- yes --> F
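The fork and join in the middle of the diagram (save the image on one branch, run it through the TPU on the other, then wait for both) can be sketched in plain C. This is only an illustration of the pattern under the assumption that POSIX threads are used; the post does not say how CVA actually implements the join. The frame type, the function names, and the placeholder "inference" are all hypothetical stand-ins.

```c
#include <pthread.h>
#include <stddef.h>

/* Hypothetical frame record; a real one would carry pixel data. */
typedef struct {
    int id;            /* frame counter */
    int saved;         /* set by the save branch */
    int result;        /* set by the inference branch */
    int result_saved;  /* set by the inference branch */
} frame_t;

/* Stand-in for "Save Image to sdcard"; runs on its own thread. */
static void *save_image(void *arg)
{
    frame_t *frame = arg;
    frame->saved = 1;
    return NULL;
}

/* Stand-in for "Send Image to TPU" and "Output from TPU". */
static int run_inference(const frame_t *frame)
{
    return frame->id * 2;  /* placeholder result, not a real model */
}

/* Handles one wakeup: forks the save branch, runs the inference branch
 * on the calling thread, then joins. Returns 0 on success, -1 on error. */
int process_frame(frame_t *frame)
{
    pthread_t saver;
    if (pthread_create(&saver, NULL, save_image, frame) != 0) {
        return -1;
    }

    frame->result = run_inference(frame);
    frame->result_saved = 1;  /* stand-in for "Save Output to sdcard" */

    if (pthread_join(saver, NULL) != 0) {
        return -1;
    }
    return 0;
}
```

The two branches touch different fields of the frame, so they do not need a lock in this sketch. On older toolchains this needs to be built with `-pthread`.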

For flight, I made the scheduler send the wakeup message every 33 ms, which gave me a frame rate of roughly 30 frames per second. To actually interface with the TPU, I used Google's TensorFlow Lite library, linked it into cFS, and put together a library called coral_lib to carry all the code for managing the flow of data between the TPU and the CPU.
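As a quick check on the timing above, the relationship between wakeup period and frame rate is just a reciprocal. The two helper names below are hypothetical and only there to show the arithmetic.

```c
/* Frame rate in Hz for a given wakeup period in milliseconds. */
double rate_hz_from_period_ms(double period_ms)
{
    return 1000.0 / period_ms;
}

/* Wakeup period in milliseconds needed for a given frame rate in Hz. */
double period_ms_from_rate_hz(double rate_hz)
{
    return 1000.0 / rate_hz;
}
```

A 33 ms period works out to about 30.3 wakeups per second, while exactly 30 frames per second would need a period of about 33.3 ms, so a whole-millisecond period of 33 is the closest fit.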

Next Steps

Now that I had the flight software complete, it was time to build a neural network to actually fly!!