Purpose

Waldo is a very elusive character that has always been a pain for me to find since the art hurts my eyes. So to compensate, I figured that I would just make a computer see for me and find me Waldo so I do not have to. I used a techinque called transfer learning to pull this off. My preprocess model is inception v3 and the final model that I choose to use for classification was a support vector machine. For my support vector machine I used a linear kernel and adjusted the class weights to compensate for the fact that I have a small amount of Waldos examples. As such I built this program to solve where is Waldo for me.

Approach

Breaking the Problem

At the time of creating this project, I only knew how to

create models to conduct image classification. As such I

needed to come up with a way to translate the problem into a

classification problem rather than and image segmantation

problem. I achieved this by adding a preproccessing step to my

pipeline.

Rather than dealing with the image as a whole I decided to

deal with 64x64 patches of the image. I created a sliding

window of size 64x64 and had it slide across the image to

create my patches. Then I would then run my model to see if

Waldo exists within these patches. If my model states that

Waldo is not inside a patch it will return a 0, otherwise it

would return a 1. If the reconstructor finds the 0, it will

shade in that patch. If it finds a 1, it will leave the patch

as is. Using this techinque I am able to shade in all the

places my model thinks Waldo isn't and leave the places where

it thinks Waldo is untouched.

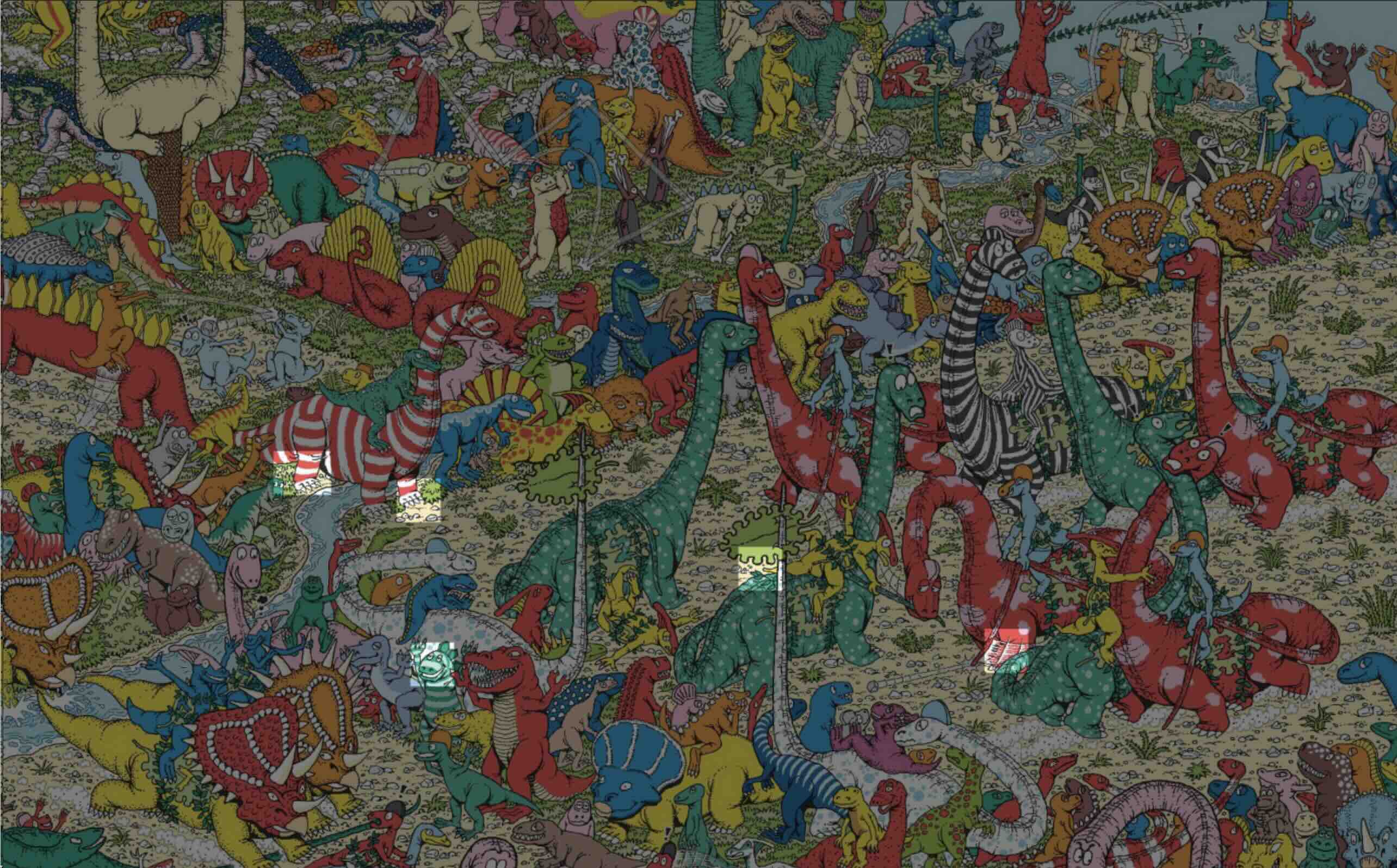

While it is a very simple approach for the complex

problem of finding Waldo, it is by no means perfect or the

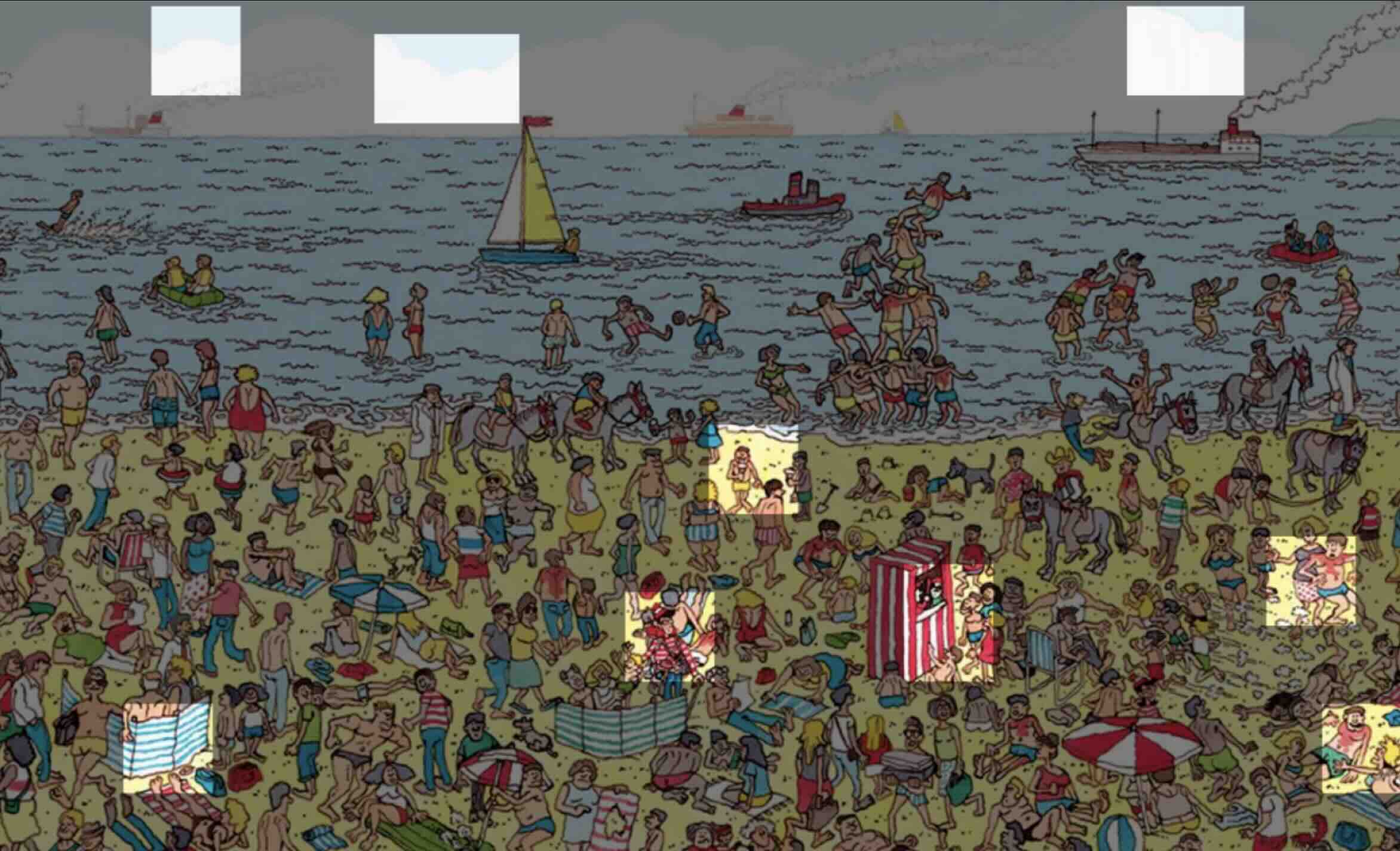

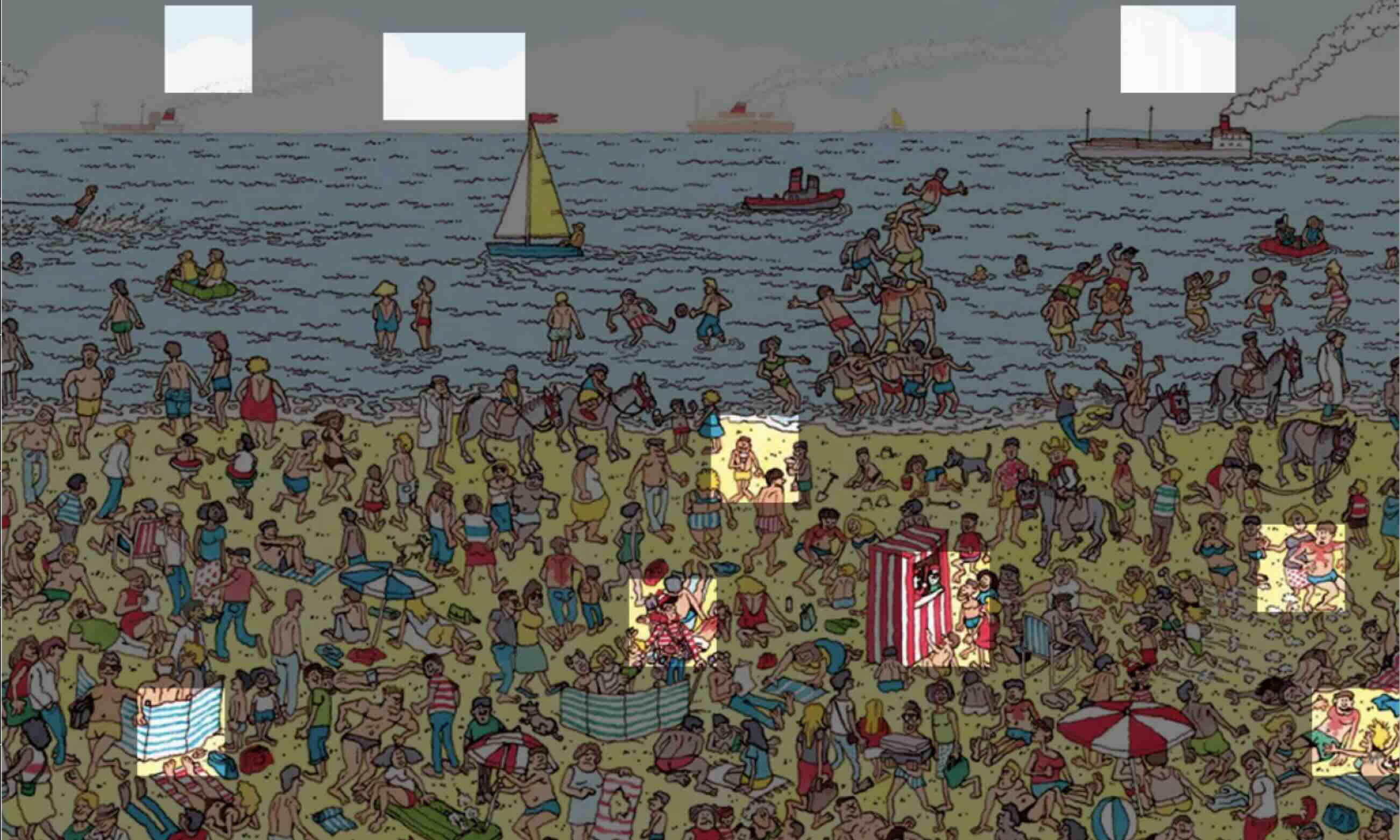

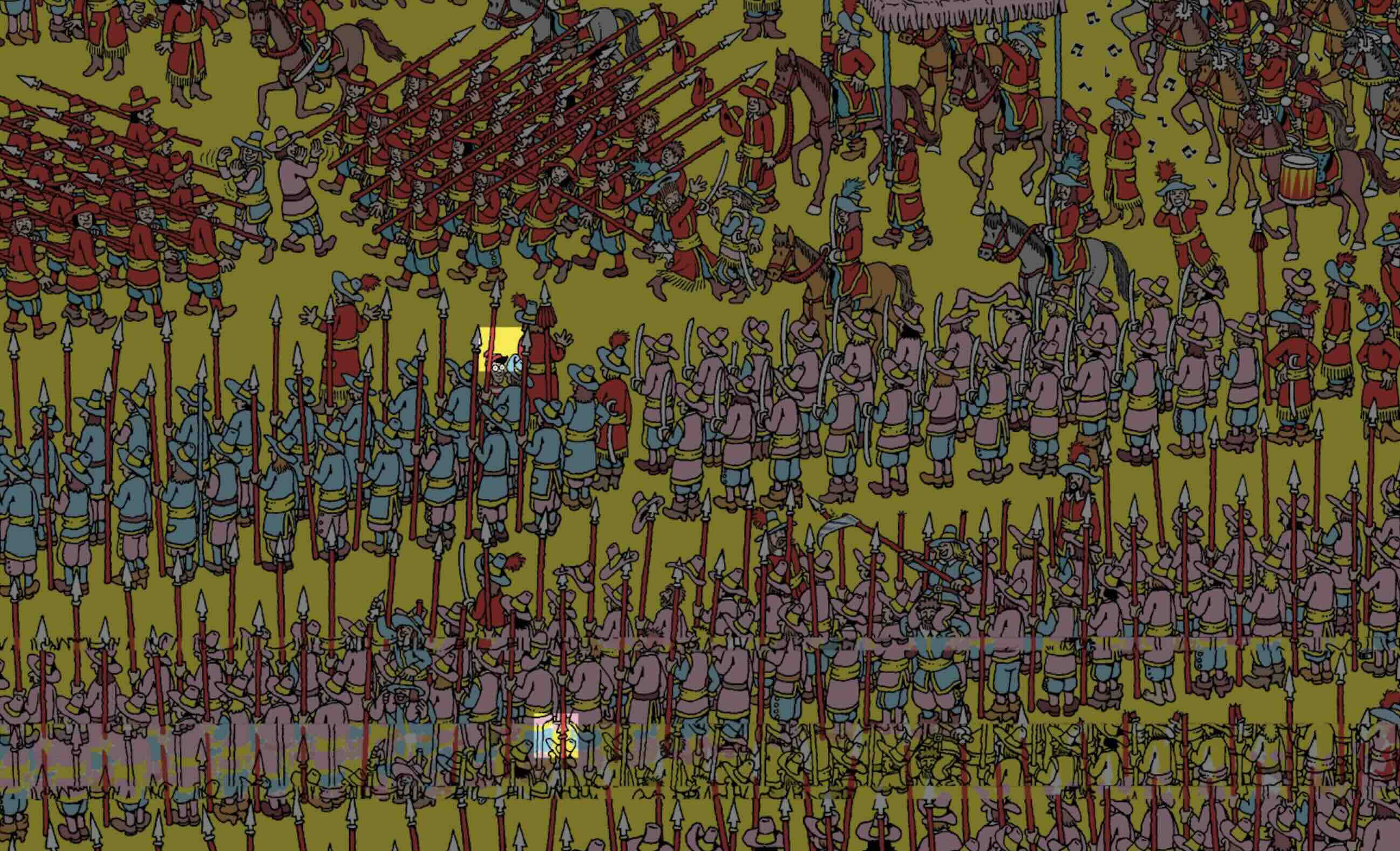

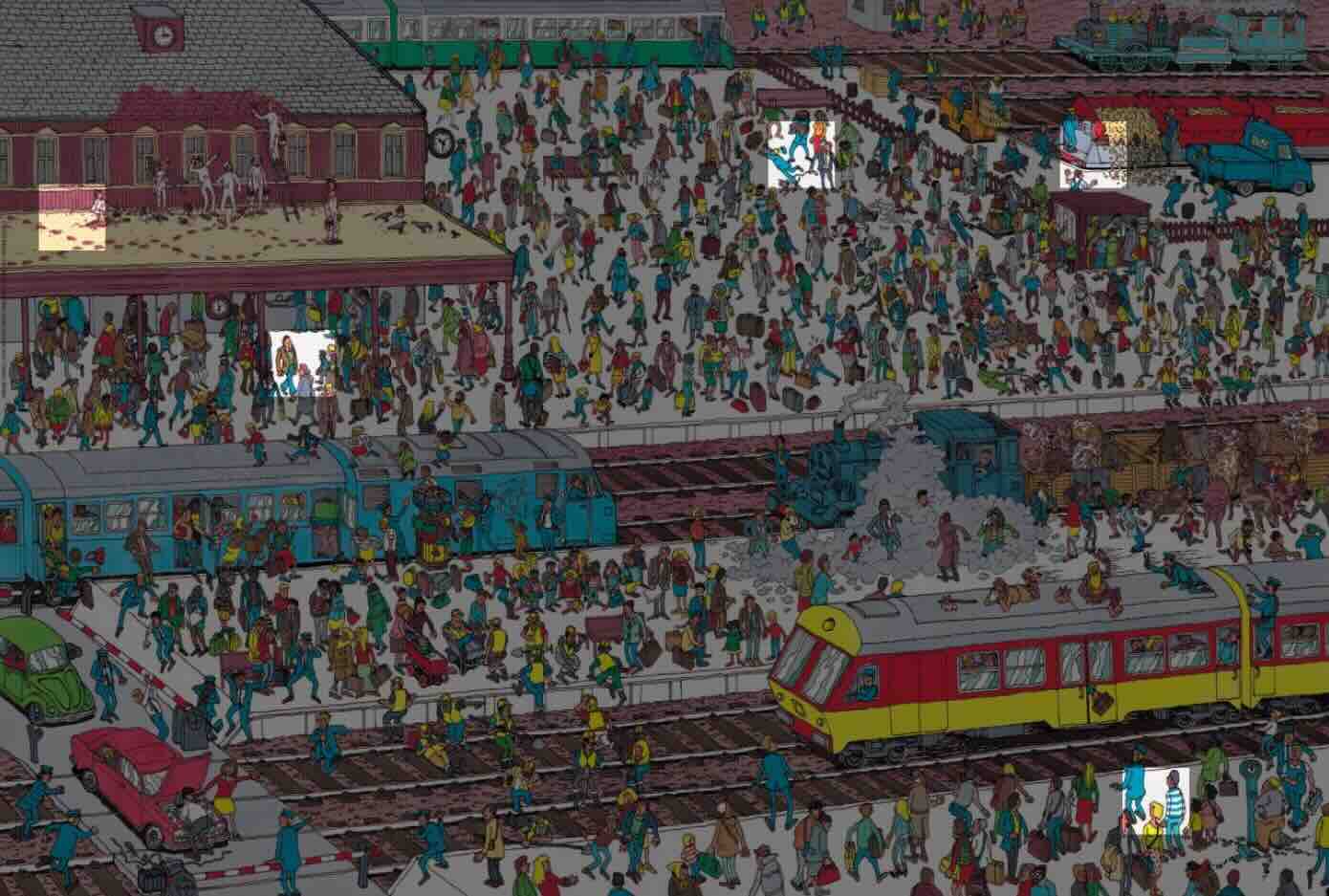

best. As shown by the examples below there the model clearly

has much to learn. It has a tendency to search for very

specific patterns and gives a lot a false positives.

Model Diagram

The diagram above depicts the structure of the pipeline.

Successful Examples

Failure Examples

Takeaways

Lessons and Mistakes

- It is way easier to break the image before hand and then run the images as batches through the model. It saves so much time and process power.

- When training support vector machines, make sure to use a linear kernal since it overfits the least. Especially in the case where there are very few positive cases.

- Save models and data as pickle files to save time and avoid having to redo your work when taking breaks. Also back up your models so that you can always go backwards

- When working with datasets with a small number of positive samples compared to negative samples, be sure to weight your data to offset the difference in sample sizes.

- Always train big models with a GPU!!! I wasted too much time training on a cpu.

Future Work

In the future, if I ever do get time to revisit this project I

will want to try and play with the model that lives on top of

the inception embedding extractor. Perhaps exchange the

support vector machine for a few fully connected layers and

try and leverage deep learning tricks to get a better outcome.

I would also like to play around with the overlap values for

when I am sampling my 64x64 images. In this project as is, I

went for an overlap of 0.7(which means that 70% of the new

64x64 grid would be overlapping with the previous grid).

Tuning the overlap constant could be a way to boost accuracy

and improve my Waldo tracking.

Finally I would like to try and play around with the image

itself and see what would happen if I were to take out all

color or transform it in some way. I could even try increase

the patch sizes from 64x64 to 128x128 or 32x32.

DataSet used:

https://www.kaggle.com/datasets/residentmario/wheres-waldo

Email me for source code